What's in the RedPajama-Data-1T LLM training set

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

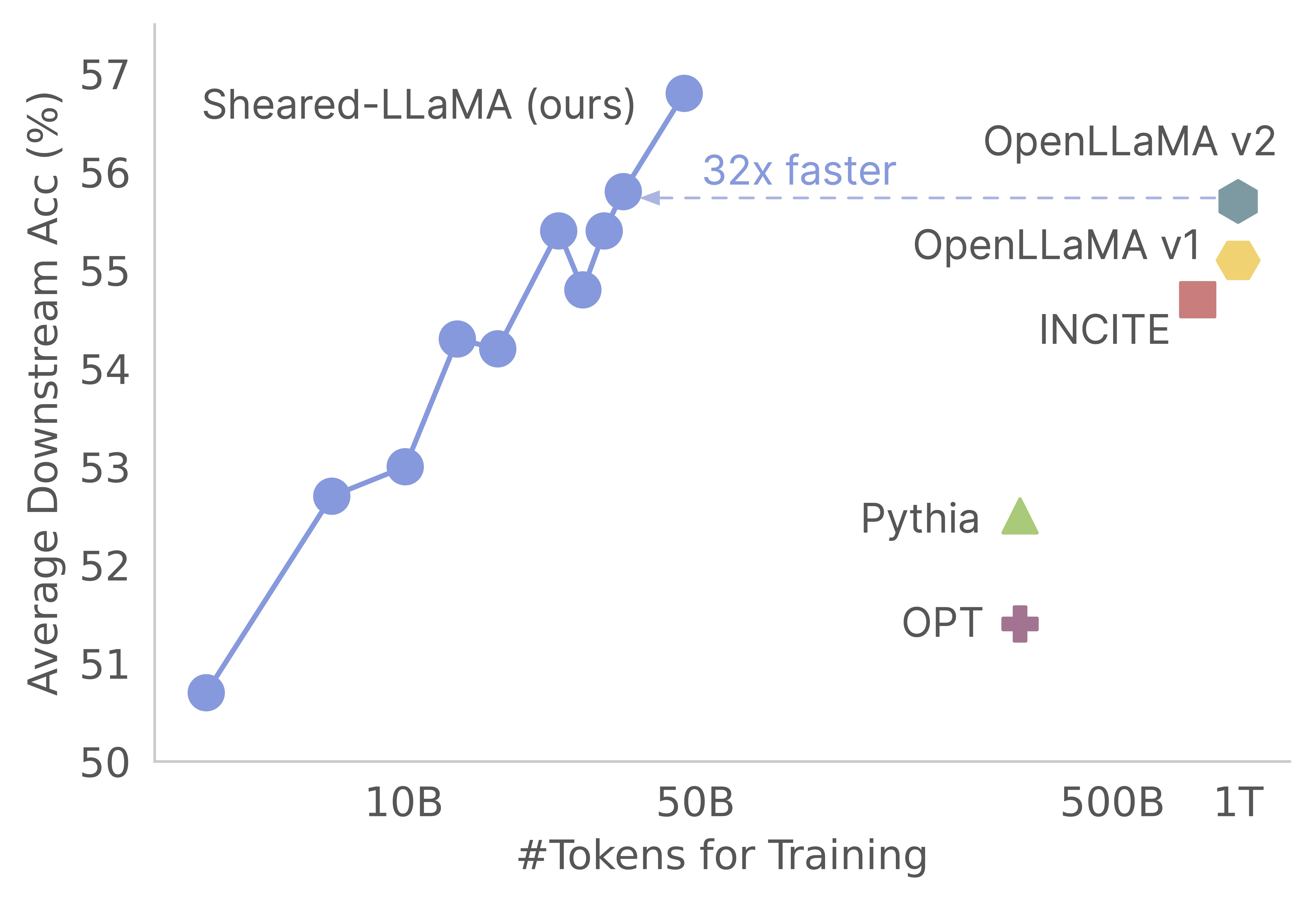

Sheared LLaMA: Accelerating Language Model Pre-training via

Training data used to train LLM models

LaMDA to Red Pajama: How AI's Future Just Got More Exciting!

From ChatGPT to LLaMA to RedPajama: I'm Switching My Interest to

The Latest Open Source LLMs and Datasets

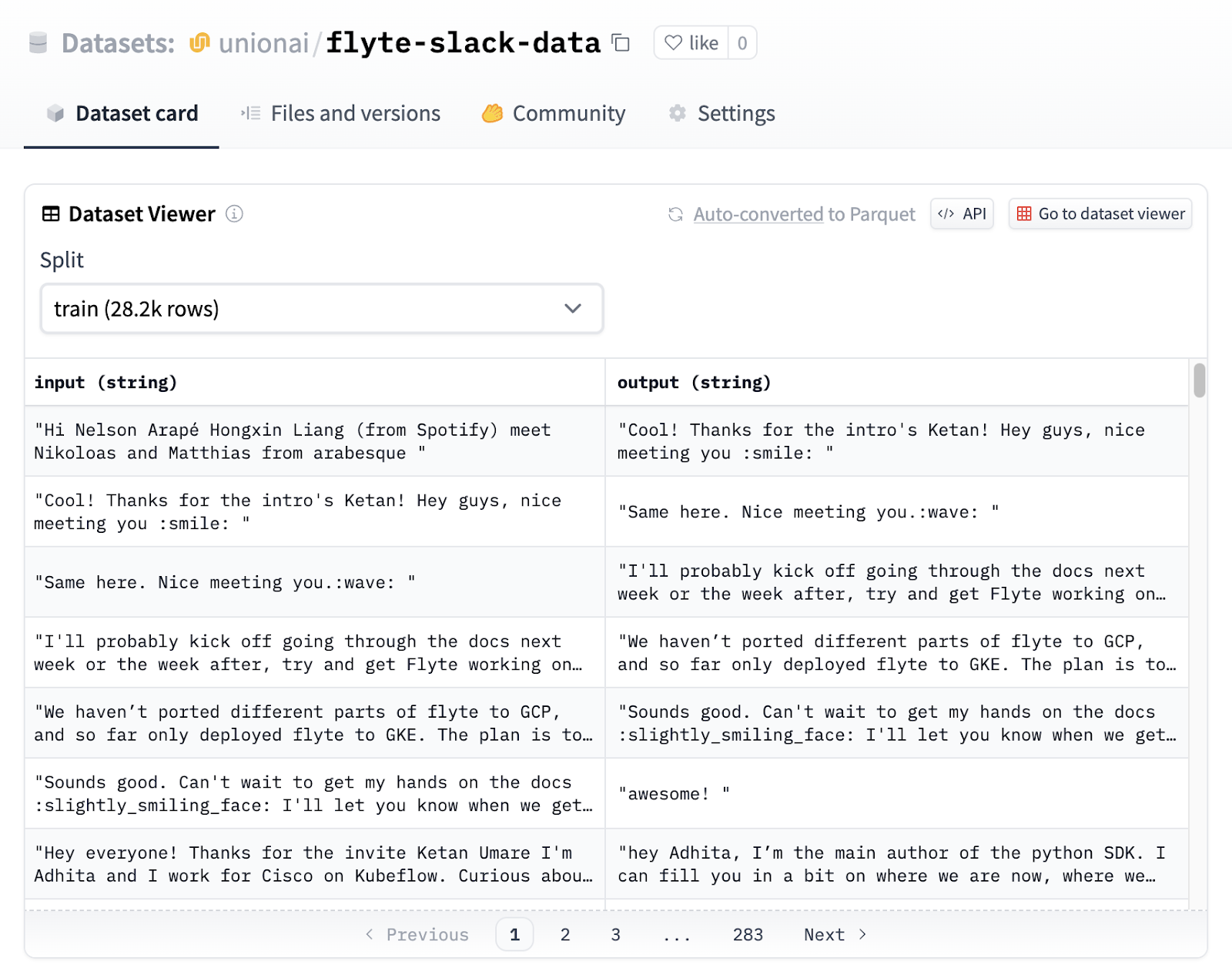

Fine-Tuning Insights: Lessons from Experimenting with RedPajama

RedPajama-Data-v2: An open dataset with 30 trillion tokens for

togethercomputer/RedPajama-Data-V2 · Datasets at Hugging Face

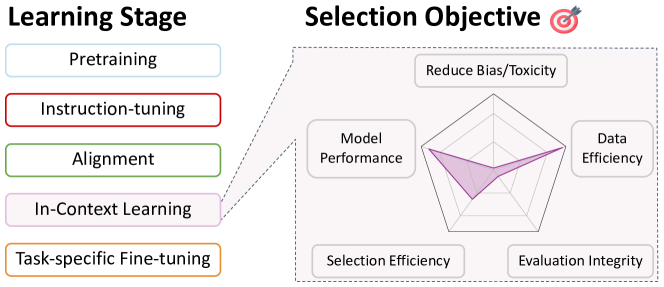

A Survey on Data Selection for Language Models

Finetuning an LLM: RLHF and alternatives (Part I)

Skill it! A Data-Driven Skills Framework for Understanding and

Web LLM runs the vicuna-7b Large Language Model entirely in your

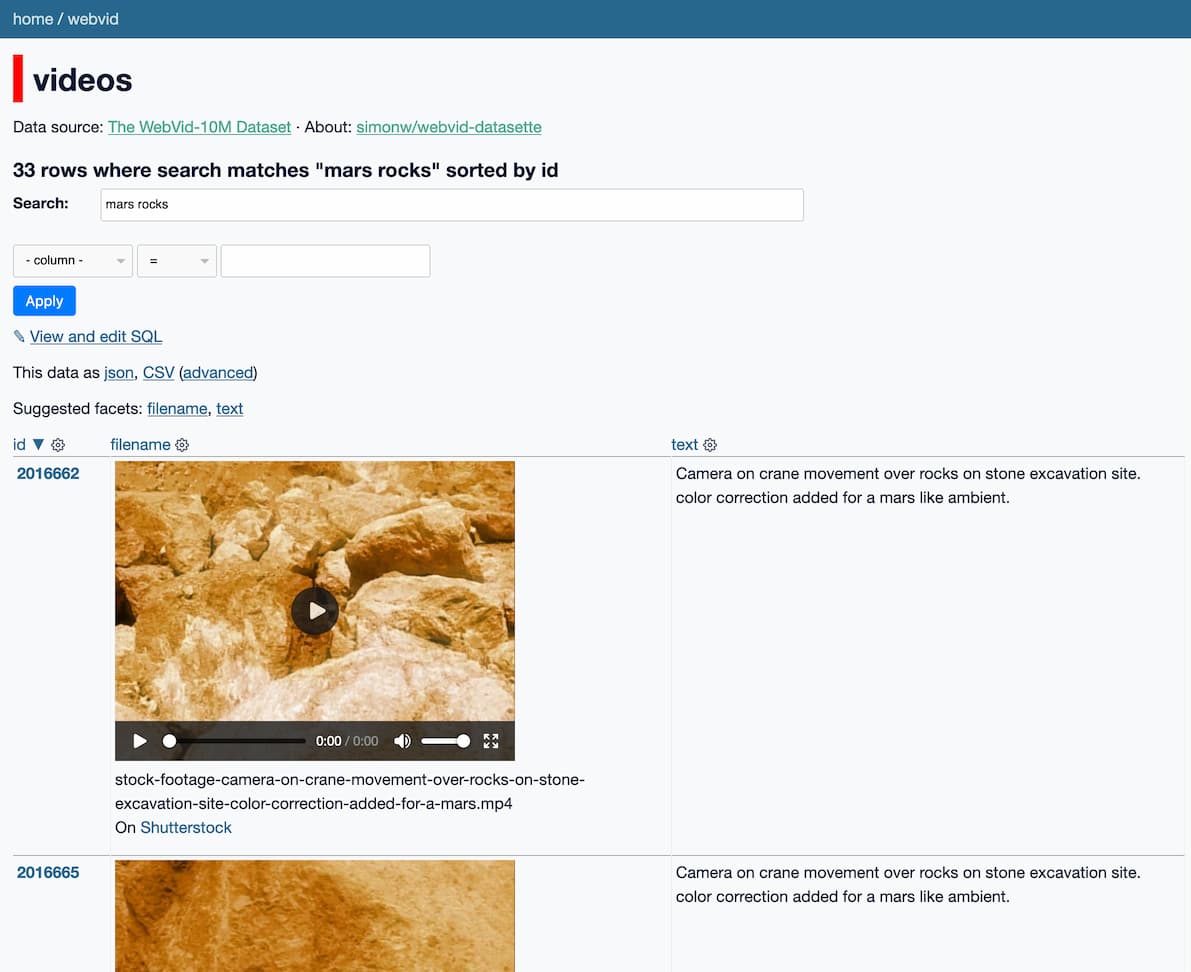

Exploring 10m scraped Shutterstock videos used to train Meta's
