RAG vs Finetuning: Your Best Approach to Boost LLM Applications

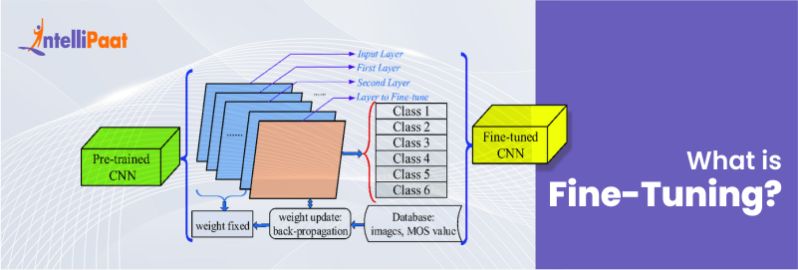

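There are two main approaches to improving the performance of large language models (LLMs) on specific tasks: finetuning and retrieval-augmented generation (RAG). Finetuning updates the weights of an LLM that has already been pre-trained on a large corpus of text and code, typically by continuing training on task-specific data. RAG leaves the weights unchanged: it retrieves relevant documents at query time and adds them to the prompt.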
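To make the retrieval side concrete, here is a minimal sketch of a RAG-like flow in Python, using only the standard library. It is an illustration under stated assumptions, not a production design: the `DOCUMENTS` list, the helper names (`tokenize`, `cosine_similarity`, `retrieve`, `build_prompt`), and the bag-of-words similarity are all hypothetical stand-ins. A real system would more likely use learned embeddings and a vector index, and would send the resulting prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; a real system would index far more text.
DOCUMENTS = [
    "Finetuning updates the weights of a pre-trained language model.",
    "Retrieval-augmented generation adds retrieved passages to the prompt.",
    "Tokenization splits text into units that the model can process.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that places retrieved context before the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    # The model's weights are never touched; only the prompt changes.
    print(build_prompt("What does finetuning change in a model?", DOCUMENTS))
```

The point of the sketch is the contrast with finetuning: nothing about the model is retrained, so updating what the application knows only requires changing the document collection.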

Building a Design System for Ascend

What is RAG? A simple python code with RAG like approach

Real-World AI: LLM Tokenization - Chunking, not Clunking

The Art Of Line Scanning: Part One

Issue 24: The Algorithms behind the magic

The Power of Embeddings in SEO 🚀

Finetuning LLM

Issue 13: LLM Benchmarking
